jsDelivr May outage postmortem

During the night, on May 2, 2024, the jsDelivr CDN domain cdn.jsdelivr.net started serving an expired SSL certificate to clients connecting from certain regions.

The outage lasted for more than 5 hours and affected users mostly in

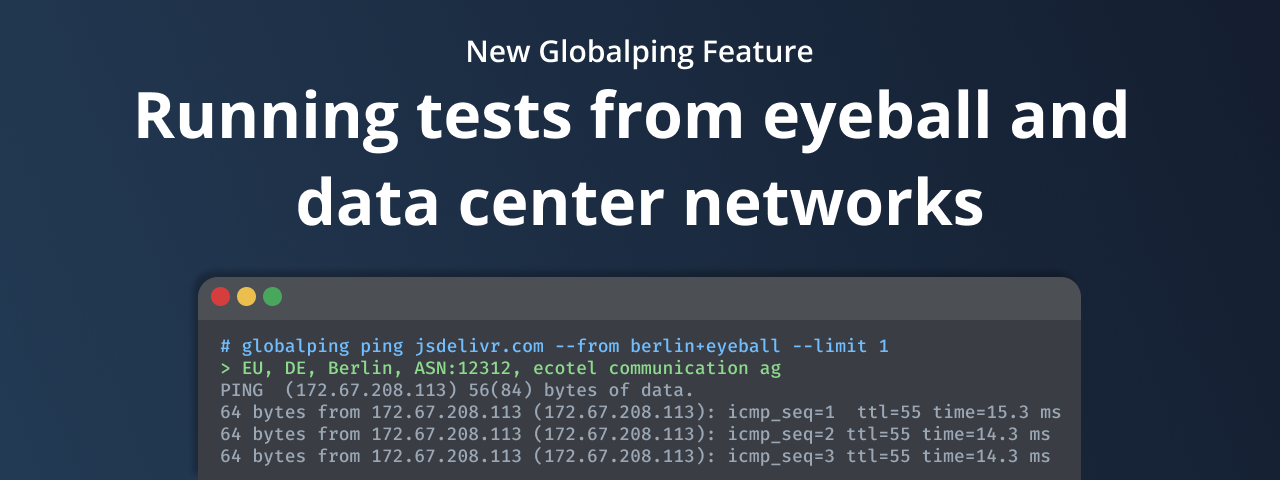

New Globalping feature: Eyeball and data center network tags

Today, we're happy to announce the release of a new Globalping feature that has been highly requested by our community: the ability to run network measurement commands from probes within eyeball or data center networks! You can combine these new

Joining forces with Algolia for an even better npm search

Utilized by jsDelivr, Yarn, CodeSandbox, and several other open-source projects, Algolia’s npm search has been an essential part of the user experience for developers searching for npm packages for almost seven years now. Today, we are excited to announce

Network troubleshooting in Slack made easy with Globalping

With about 20 million active users, Slack is one of the most popular digital communication tools, especially for tech industry teams. It's an invaluable platform for collaborative work, idea sharing, and issue troubleshooting, bringing teams together regardless of location.

Thanks

Globalping goes live! A community-powered global network testing platform

We're happy to announce that our new open-source project, Globalping, is officially launched and available to everybody.

Test global latency, ping from any corner of the globe, troubleshoot routing issues, and even investigate censorship worldwide – all completely free.